A Toronto lawyer, whose licence was already suspended due to his connection to a deadly triple shooting, has been found to have used artificial intelligence in a subsequent appeal, according to reports from the Law Society of Ontario. The case, which intertwines a violent crime with emerging technology, is sending shockwaves through Canada's legal community and prompting urgent discussions about professional ethics.

The Suspension and the Underlying Case

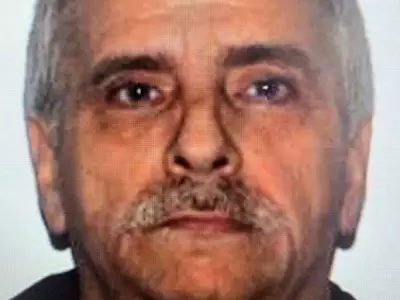

The lawyer, whose identity is known within the profession, had his licence to practise law suspended over his alleged links to a shooting that left three people dead. The specifics of his involvement in the triple shooting remain under investigation by authorities. The Law Society's initial suspension was nonetheless a direct consequence of this serious association, reflecting the regulator's mandate to protect the public interest.

While suspended, the lawyer filed an appeal, and it was during this appeal process that his use of artificial intelligence came to light. An AI tool was reportedly used to help draft or research the legal arguments. The Law Society of Ontario has since taken further disciplinary action, although the precise nature of the additional sanctions tied to the AI use has not been fully detailed in public records.

Ethical Implications of AI in Legal Practice

This incident throws a spotlight on the rapidly evolving and largely unregulated use of AI within the justice system. AI tools can make legal research and document drafting more efficient, but they also carry significant risks. These include generating inaccurate or entirely fabricated case law, a phenomenon known as "AI hallucination", and diluting lawyers' accountability for the work they file.

The case of the suspended Toronto lawyer raises a critical question: does the use of AI by a lawyer already under scrutiny for misconduct compound the ethical breach? Legal experts argue that the case underscores the need for clear professional guidelines. Lawyers have fundamental duties of competence and of candour to the court, and submitting AI-generated content that has not been rigorously checked by a qualified legal professional could breach both.

Broader Consequences for the Legal Profession

The Law Society of Ontario's response is being closely watched, as it could set a precedent for how legal regulators across Canada handle cases where professional misconduct intersects with the use of new technologies. The case is likely to accelerate ongoing discussions about mandatory training for lawyers on the ethical use of AI, possible requirements to disclose when AI has been used in court filings, and rules of professional conduct written specifically for these tools.

The incident may also influence judicial attitudes. Courts may increasingly demand that lawyers disclose whether AI was used to prepare the materials they submit. The Toronto case, with its grave underlying facts, serves as a stark warning to the profession about the perils of embracing powerful technology without a robust ethical framework.

As the legal community grapples with this modern dilemma, the core principles of the profession—integrity, accountability, and service to the court—remain paramount. This case demonstrates that those principles must now be actively defended in the digital age.